The “2023 AI-Generated Code Security Report” by Snyk sheds light on a pressing issue in the tech world: the security implications of AI-generated code.

While AI coding tools revolutionize how we develop software, speeding up processes and breaking new ground, they also bring a host of cybersecurity challenges that are often overlooked.

This report is a deep dive into the complexities of AI in the software industry. It reveals a stark contrast between the shiny allure of AI efficiency and the hidden dangers lurking beneath.

As developers, we're at the forefront of this transformation, but are we prepared for the consequences?

The Hidden Dangers of AI Coding Tools

Despite their rapid adoption and perceived efficiency, AI coding tools pose significant security risks.

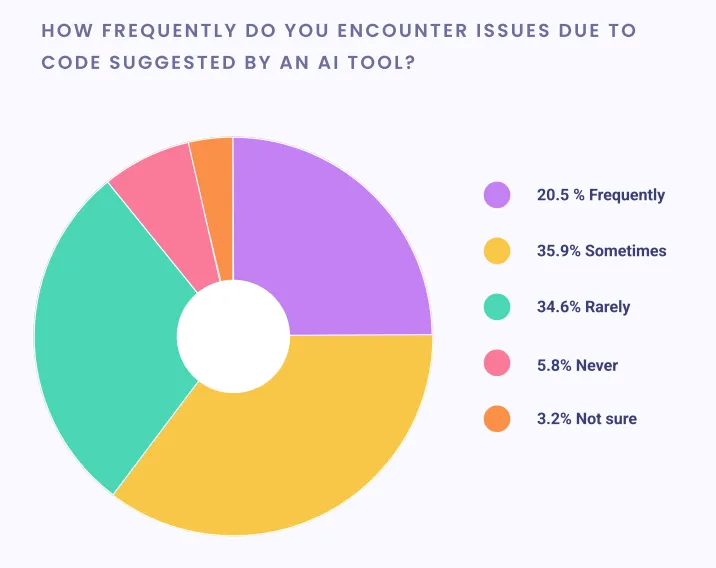

A substantial 56.4% of developers report encountering security issues with AI-generated code, yet few have adjusted their security processes accordingly.

Irony of Awareness Yet Inaction

Developers are not oblivious to these risks.

They understand the dangers of AI and the importance of responsible usage.

However, there is a striking gap between this awareness and the actual implementation of security measures.

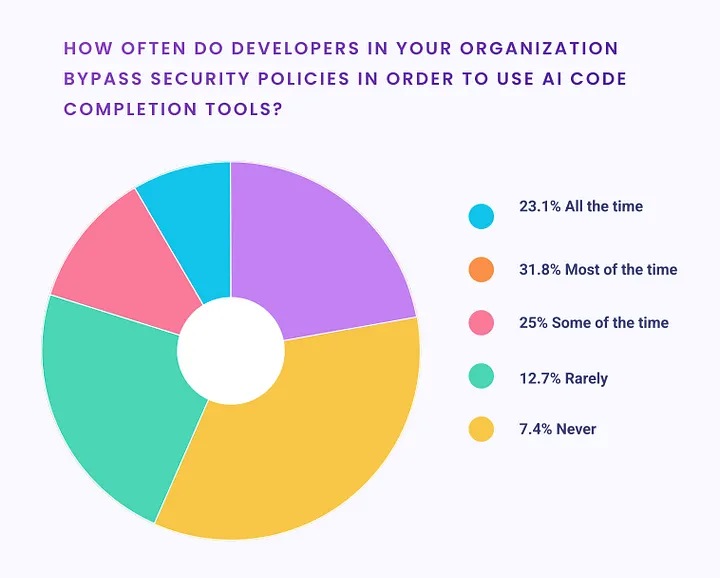

Only a small fraction of teams have automated their security checks, and a staggering 80% of developers admit to bypassing AI security policies.

AI Tools and Open Source Vulnerabilities

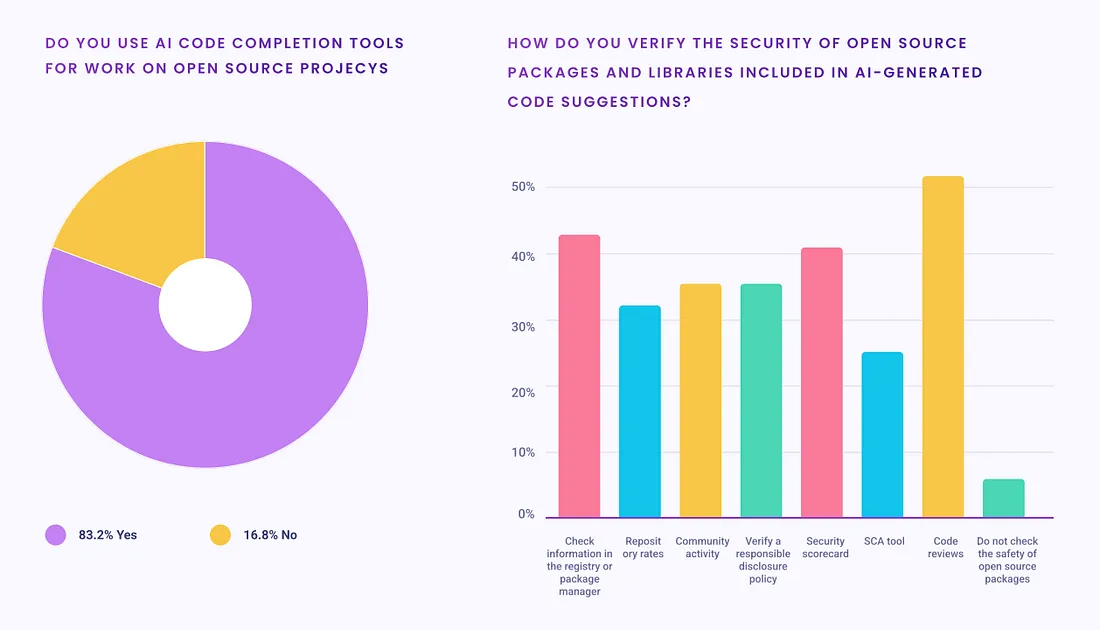

The situation is particularly concerning in the realm of open source code.

AI tools accelerate the adoption of open source components without adequate security validation, leading to a feedback loop of insecurity.

Stanford University research highlights this problem, with AI tools often recommending insecure open source libraries.

The Stanford study used an AI coding model tuned specifically for computer code. When coders were asked to write an encryption function, the AI tool consistently recommended open source libraries whose own documentation explicitly stated that they were insecure and not suitable for high-security use cases. Worse, developers in the study believed the AI suggestions made their code more secure even when they did not.
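One way to put a validation step in front of AI-suggested open source components is a small automated gate that rejects dependencies nobody has reviewed. The sketch below is only an illustration, not something taken from the Snyk report or the Stanford study: the package names, the minimum versions, and the `check_requirements` helper are all hypothetical, and a real pipeline would pull its approved list from the security team and pair it with a proper vulnerability scanner.

```python
import re

# Hypothetical allowlist: package name -> minimum reviewed version.
# These names and versions are made up for illustration only.
APPROVED = {
    "approved-http": (2, 31, 0),
    "approved-crypto": (41, 0, 0),
}

# Matches exact pins such as "name==1.2.3"; anything else is treated as unpinned.
_PIN = re.compile(r"^([A-Za-z0-9_.-]+)\s*==\s*([0-9]+(?:\.[0-9]+)*)$")


def parse_version(text):
    """Turn '2.31' into (2, 31, 0) so versions compare as padded tuples."""
    parts = [int(part) for part in text.split(".")]
    parts += [0] * (3 - len(parts))
    return tuple(parts)


def check_requirements(lines, approved=APPROVED):
    """Return a list of human-readable problems; an empty list means pass."""
    problems = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        match = _PIN.match(line)
        if match is None:
            problems.append(f"{line}: not pinned to an exact version")
            continue
        name = match.group(1).lower()
        version = parse_version(match.group(2))
        if name not in approved:
            problems.append(f"{name}: not on the approved list")
        elif version < approved[name]:
            problems.append(
                f"{name}: {match.group(2)} is older than the reviewed minimum"
            )
    return problems
```

Run against the lines of a requirements file in CI, a non-empty result could fail the build, so a library an AI assistant suggested has to be reviewed by a person before it ships.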

Cognitive Dissonance: Trust vs Reality

There is a cognitive dissonance within the tech community.

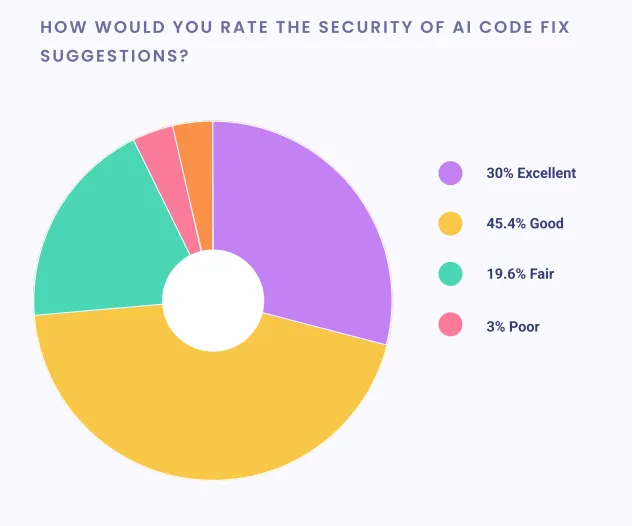

While 75.4% of respondents rate AI code fix suggestions as good or excellent, the reality of frequent security lapses tells a different story.

This overestimation of AI tool security is a significant concern for application security.

Organizational Complacency

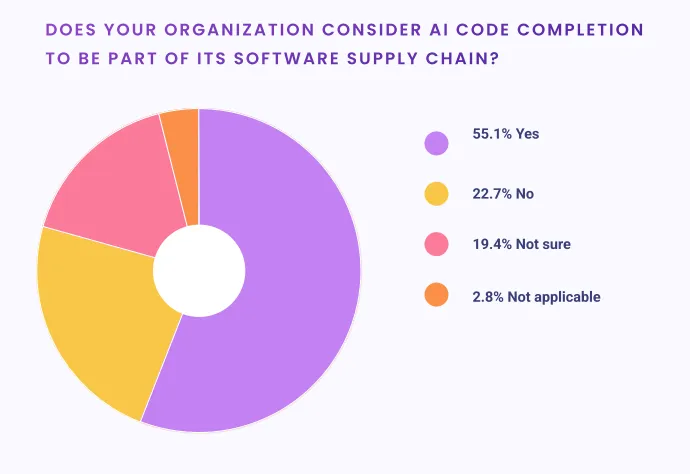

A majority (55.1%) now view AI code completion as a part of their software supply chain, yet this hasn't translated into meaningful changes in security practices.

The Underlying Issues and Solutions

- Recognizing AI Blindness: Developers worry about over-reliance on AI tools, which could erode their coding skills and leave them unable to recognize solutions that fall outside familiar patterns.

- AppSec Teams Under Pressure: More than half of AppSec teams struggle to keep up with the pace of AI-driven code production, indicating the need for more robust security processes.

- Educational Imperative: The key to resolving the AI infallibility bias, the assumption that AI suggestions are inherently correct, lies in education and the implementation of secure, industry-approved tools. Organizations must educate their teams about the risks associated with AI tools and ensure the use of reliable security measures.

Conclusion: A Call for Vigilance and Action

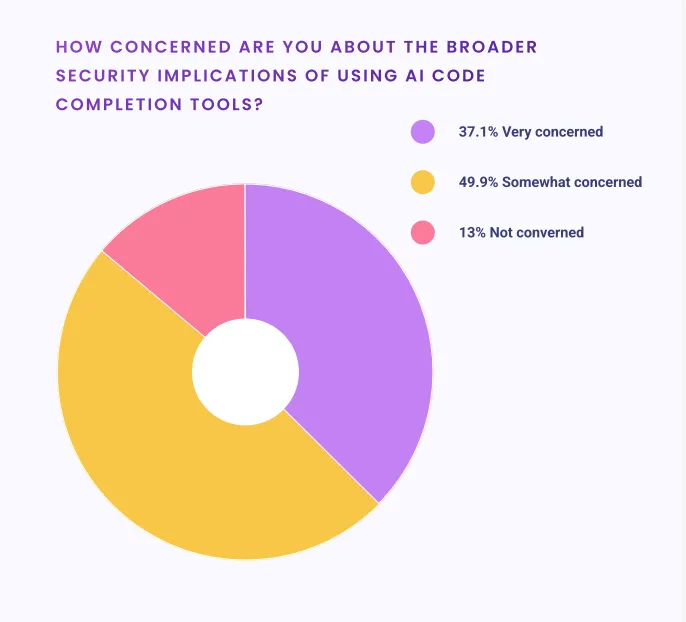

87% Are Concerned About AI Security

The “2023 AI-Generated Code Security Report” by Snyk paints a concerning picture of the cybersecurity landscape in AI-generated code.

While AI tools offer unprecedented efficiency, they introduce significant risks that are often underestimated.

The tech community must address this disconnect between perception and reality by enhancing education, implementing stringent security measures, and fostering a culture of vigilance and responsibility.

Only then can we harness the full potential of AI coding tools without compromising our digital security.
